What is Round Trip Time - RTT vs TTFB vs Latency

Round Trip Time (RTT) is a metric that measures the time taken by a network packet to travel from sender to receiver and then back from receiver to sender.

RTT = Latency (Client to Server) + Server Processing Time + Latency (Server to Client)
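As a quick worked example, here is the formula above written as a small Python function. The component values are hypothetical and only show the arithmetic.

```python
def round_trip_time_ms(latency_to_server_ms: float,
                       server_processing_ms: float,
                       latency_to_client_ms: float) -> float:
    """RTT = latency (client to server) + server processing time + latency (server to client)."""
    return latency_to_server_ms + server_processing_ms + latency_to_client_ms


# Hypothetical values: 20 ms each way plus 1 ms of processing for a ping-like request.
print(round_trip_time_ms(20.0, 1.0, 20.0))  # 41.0
```

The two one-way latencies are separate parameters because the forward and return paths over the internet are not always symmetric.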

RTT is a useful metric for evaluating network performance, but it is not the best measure of how quickly a website is served to end users. TTFB is a better metric for that because it also reflects server performance. Nevertheless, RTT directly influences TTFB, so it is important to keep your website's RTT low.

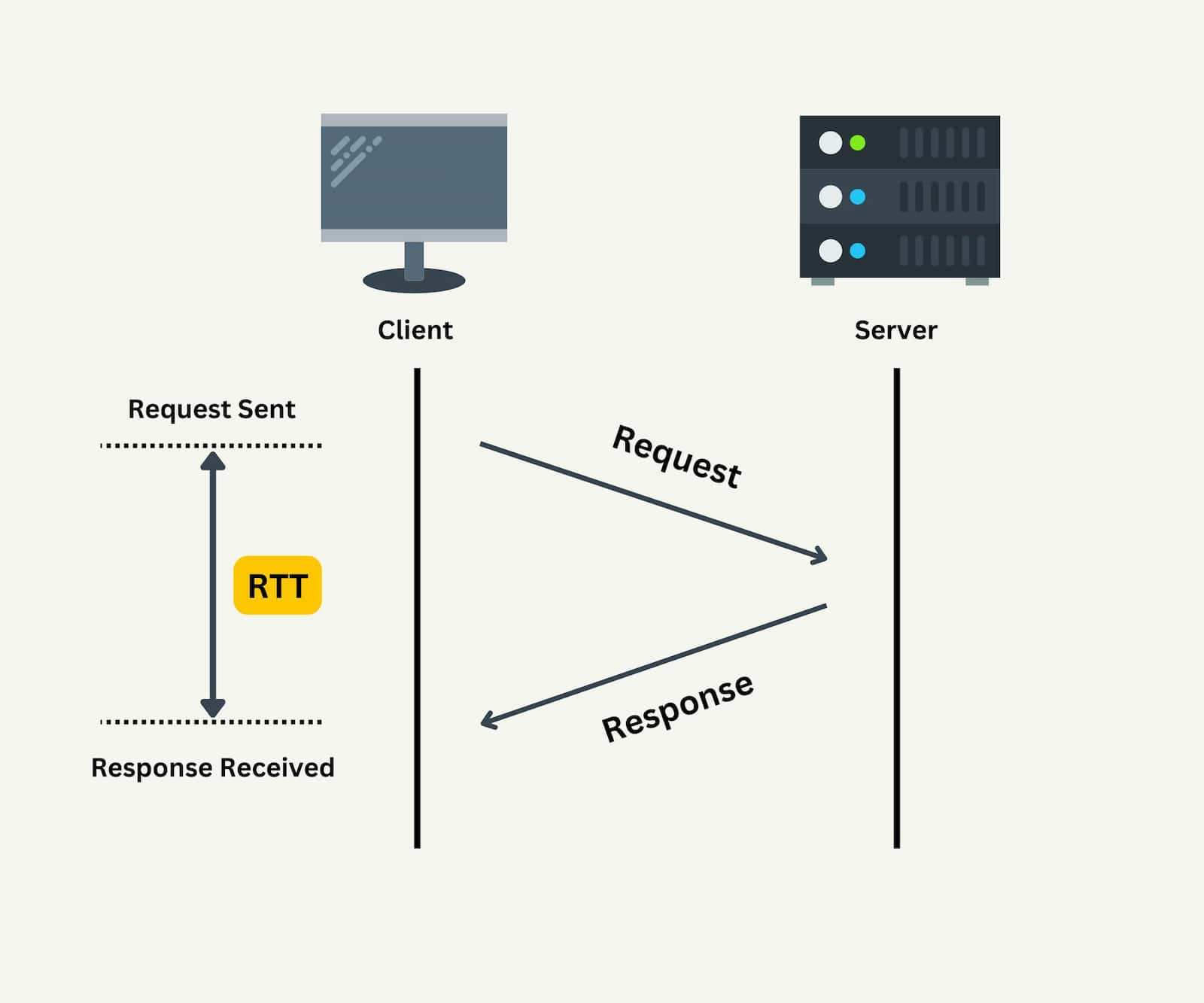

Here's a quick illustration of Round Trip Time.

As the illustration shows, the Round Trip Time is the period between the client sending a request and the client receiving the response. Apart from latency, it may also include a small server processing delay.

When measuring RTT, we ideally send a request, such as a ping, that requires minimal processing and resources. This keeps server processing time to a minimum, so the measured RTT consists mainly of the latency in both directions.
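ping uses ICMP, which usually needs raw-socket privileges to send from a program. Timing a TCP handshake is another way to get a round trip with minimal server processing. The client's connect() call returns once the server's SYN-ACK arrives, which takes roughly one RTT, and the server's operating system answers the handshake without involving the application. The Python sketch below uses only the standard library. The port (443) is an assumption, and the result includes a little local OS overhead.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Approximate one round trip by timing a TCP handshake (SYN -> SYN-ACK)."""
    # Resolve the name before starting the clock so DNS lookup time is excluded.
    family, socktype, proto, _, address = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(address)  # returns once the server's SYN-ACK has been received
        elapsed_ms = (time.perf_counter() - start) * 1000.0
    return elapsed_ms
```

For example, `tcp_connect_rtt_ms("example.com")` times one handshake to port 443. Calling it several times and looking at the spread gives a summary similar to ping's.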

How to Measure Round Trip Time

To measure Round Trip Time, open Terminal on Mac or Linux, or Command Prompt/PowerShell on Windows, and run the ping command against any domain name or IP address:

ping example.com

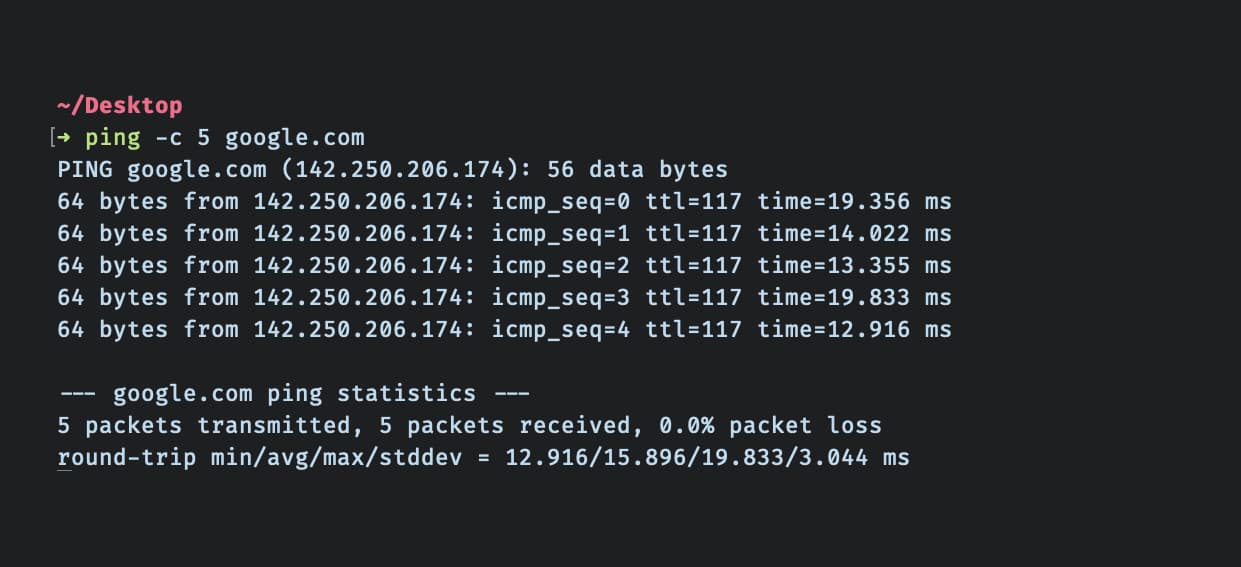

Here's an example showing the ping command for google.com.

On Mac and Linux, ping keeps sending requests until you stop it with Ctrl+C. Use the -c option to limit the number of requests. On Windows, ping sends four requests by default, and -n sets the count. When it finishes, ping prints an RTT summary with the minimum, average, and maximum values. Mac and Linux also report the standard deviation. The farther you are from the physical server (or the CDN's point of presence), the higher the RTT.
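To make the summary concrete, the Python sketch below computes the same kind of statistics from a list of RTT samples. It also parses the summary line that Linux ping ("rtt min/avg/max/mdev = ...") and macOS ping ("round-trip min/avg/max/stddev = ...") print. The sample values are hypothetical, and Windows' different summary format is not handled.

```python
import re
import statistics


def summarize_rtts(samples_ms: list[float]) -> dict[str, float]:
    """Min, average, max and (population) standard deviation of RTT samples in ms."""
    return {
        "min": min(samples_ms),
        "avg": statistics.fmean(samples_ms),
        "max": max(samples_ms),
        "stddev": statistics.pstdev(samples_ms),
    }


# Matches Linux ("mdev") and macOS ("stddev") ping summary lines.
SUMMARY_RE = re.compile(
    r"min/avg/max/(?:mdev|stddev) = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_ping_summary(line: str) -> dict[str, float]:
    """Extract min/avg/max/stddev (in ms) from a ping summary line."""
    match = SUMMARY_RE.search(line)
    if match is None:
        raise ValueError(f"not a ping summary line: {line!r}")
    keys = ("min", "avg", "max", "stddev")
    return dict(zip(keys, (float(value) for value in match.groups())))
```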

What Factors Affect Round Trip Time

1) Physical Distance

The greater the physical distance between the client and the server, the higher the RTT. The same applies to latency and TTFB.
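Distance sets a hard floor on RTT because signals cannot travel faster than light in the medium. The Python sketch below estimates that floor, assuming light in optical fiber covers about 200 km per millisecond (roughly two thirds of its speed in a vacuum). Real routes are longer than the straight-line distance and add other delays, so actual RTTs are higher.

```python
# Assumption: light in optical fiber travels about 200,000 km/s, i.e. ~200 km per ms.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on RTT from propagation delay alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_MS


for km in (100, 1_000, 6_000):
    print(f"{km:>5} km -> at least {min_rtt_ms(km):.0f} ms")
```

Under this assumption, every 1,000 km of distance adds at least 10 ms to the RTT.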

2) Network Traffic

If any part of the network is congested due to high usage, there will be delays as the packets have to wait in a queue.
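To see why congestion hurts so much, the Python sketch below uses the textbook M/M/1 queue. This is an idealized model with random arrivals and service times, not a description of how real routers behave. In this model, a packet's average time in the system is 1 / (service rate − arrival rate), so delay grows sharply as a link approaches full utilization. The link capacity of 10,000 packets per second is hypothetical.

```python
def mm1_time_in_system_ms(arrival_rate_pps: float, service_rate_pps: float) -> float:
    """Average time (ms) a packet spends waiting plus being served in an M/M/1 queue."""
    if arrival_rate_pps >= service_rate_pps:
        raise ValueError("queue is unstable: arrival rate must be below service rate")
    return 1000.0 / (service_rate_pps - arrival_rate_pps)


# Hypothetical link that can forward 10,000 packets/s, at increasing utilization.
for load in (0.5, 0.9, 0.99):
    delay = mm1_time_in_system_ms(load * 10_000, 10_000)
    print(f"{load:.0%} busy -> {delay:.2f} ms per packet")
```

Going from 50% to 99% utilization raises the modeled delay fifty-fold, which is why traffic spikes show up as RTT spikes.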

3) Network Hops Count

The more routers or other network devices a packet has to pass through, the higher the RTT, because each device takes some time to process and forward it.
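The Python sketch below shows how per-hop delays add up. It assumes a path of identical hops and a reply that retraces the same hops with the same delays. Both are simplifications, and the delay values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One router on the path (hypothetical delays, in milliseconds)."""
    link_delay_ms: float        # time to cross the link leading to this hop
    processing_delay_ms: float  # time the device spends handling the packet


def path_rtt_ms(hops: list[Hop]) -> float:
    """Estimate RTT assuming the reply traverses the same hops with the same delays."""
    one_way_ms = sum(hop.link_delay_ms + hop.processing_delay_ms for hop in hops)
    return 2 * one_way_ms


path = [Hop(link_delay_ms=1.0, processing_delay_ms=0.5) for _ in range(4)]
print(path_rtt_ms(path))                     # 12.0
print(path_rtt_ms(path + [Hop(1.0, 0.5)]))   # 15.0
```

Each extra hop adds its delay twice, once on the way out and once on the way back.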

4) Server Response Time

Since Round Trip Time spans from the moment the client sends a request to the moment it receives the response, it also includes the time the server needs to process the request before responding. When measuring RTT, we try to minimize this time by using a simple request such as ping. For more complex requests, however, server processing time is higher and adds to the RTT value.

5) Transmission Medium

Optical fiber generally offers the lowest RTT, followed by other wired connections such as copper cables. Wireless networks (Wi-Fi, 5G, 4G) and satellite internet services such as Starlink typically have a higher RTT.

6) Routing Paths

If a packet takes a longer or more indirect path across the internet, the RTT increases. Some CDN providers offer services that optimize network paths.

7) Packet Size

The smaller the packet, the faster it can be transmitted.
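The reason is transmission delay: the time needed to put every bit of a packet onto the link, which equals packet size divided by bandwidth. The Python sketch below computes it. The packet sizes and the 10 Mbps link are illustrative values.

```python
def transmission_delay_ms(packet_bytes: int, bandwidth_mbps: float) -> float:
    """Time (ms) to push all bits of a packet onto a link: size / bandwidth."""
    bits = packet_bytes * 8
    bits_per_ms = bandwidth_mbps * 1_000  # 1 Mbps = 1,000,000 bits/s = 1,000 bits/ms
    return bits / bits_per_ms


for size in (64, 1_500):
    print(f"{size:>5} bytes on 10 Mbps -> {transmission_delay_ms(size, 10):.4f} ms")
```

A 1,500-byte packet takes 1.2 ms on a 10 Mbps link, compared with about 0.05 ms for a 64-byte packet. This delay is paid again at each hop that stores and forwards the packet, and it shrinks as link bandwidth increases.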

RTT vs TTFB vs Latency

These three metrics are easy to confuse because they are closely related. To understand the difference, let's start with the simplest one: latency.

Latency is only a one-way delay. It is the time taken by a network packet to travel from sender to receiver (or from receiver to sender).

RTT (Round Trip Time) is the two-way delay. It is the time taken by a network packet to travel from the sender to the receiver and then back from the receiver to the sender.

TTFB (Time to First Byte) is quite similar to Round Trip Time, but it is measured for an HTTP request and includes the server processing delay along with the round trip time. On a new connection, it also includes the DNS lookup, TCP connection, and TLS negotiation, which require additional round trips. SpeedVitals also offers a TTFB Test that you can check out.
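To see TTFB for a single HTTP request, the Python sketch below times a GET from opening the connection until the response status line and headers arrive. It uses only the standard library (`http.client`). Treat it as an approximation. Browsers measure TTFB from the start of navigation and may include steps such as redirects. This timer also stops after the headers are read, not at the exact first byte.

```python
import http.client
import time
from urllib.parse import urlsplit


def measure_ttfb_ms(url: str, timeout: float = 10.0) -> float:
    """Rough TTFB: DNS + TCP (+ TLS for https) + request + server time until headers arrive."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # opens the connection lazily, then sends the request
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        response.read()                # drain the body so the connection closes cleanly
        return elapsed_ms
    finally:
        conn.close()
```

Comparing `measure_ttfb_ms("https://example.com/")` with a ping to the same host shows how much of the TTFB comes from extra round trips and server processing rather than a single RTT.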

RTT & Web Performance

Round Trip Time is an important metric for Web Performance as it directly influences TTFB which in turn affects various Web Vitals including Largest Contentful Paint (LCP) and Interaction to Next Paint (INP). Let us discuss how RTT affects Core Web Vitals and how to measure RTT for real users.

Impact on Core Web Vitals

The Time to First Byte (TTFB) metric consists of Round Trip Time as well as Server Processing Time. It plays an important role in First Contentful Paint (FCP) and Largest Contentful Paint (LCP), since neither can happen before the first byte of the page arrives. Even if your website has a fast backend and a well-optimized frontend, you can still have a poor LCP score if the Round Trip Time is high.
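To see how RTT feeds into LCP, here is a back-of-the-envelope Python model. It uses the LCP breakdown described on web.dev (TTFB + resource load delay + resource load duration + element render delay) and the standard LCP thresholds (good up to 2.5 s, poor above 4 s). As a simplifying assumption, TTFB on a fresh HTTPS connection is modeled as about four round trips (DNS, TCP, TLS 1.3, and the HTTP request) plus server time. All input numbers are hypothetical. The other subparts are held constant here, although in practice they also grow with RTT.

```python
def lcp_rating(lcp_ms: float) -> str:
    """Core Web Vitals rating for LCP: good <= 2.5 s, poor > 4 s."""
    if lcp_ms <= 2_500:
        return "good"
    return "needs improvement" if lcp_ms <= 4_000 else "poor"


def estimate_lcp_ms(rtt_ms: float, server_time_ms: float, resource_load_delay_ms: float,
                    resource_load_duration_ms: float, element_render_delay_ms: float,
                    round_trips_before_first_byte: int = 4) -> float:
    """Rough LCP estimate (ms): TTFB modeled from RTT plus the other three LCP subparts."""
    # Assumption: ~4 round trips (DNS, TCP, TLS 1.3, HTTP request) before the first byte.
    ttfb_ms = round_trips_before_first_byte * rtt_ms + server_time_ms
    return ttfb_ms + resource_load_delay_ms + resource_load_duration_ms + element_render_delay_ms


# Same hypothetical page and server, seen by a nearby user and by a distant user.
for rtt in (50, 400):
    lcp = estimate_lcp_ms(rtt, server_time_ms=200, resource_load_delay_ms=300,
                          resource_load_duration_ms=500, element_render_delay_ms=200)
    print(f"RTT {rtt} ms -> LCP ~{lcp} ms ({lcp_rating(lcp)})")
```

With these numbers, raising RTT from 50 ms to 400 ms pushes the estimate from 1,400 ms to 2,800 ms, past the 2.5 s threshold, with no change to the server or frontend.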

Since a high RTT increases TTFB, it delays the loading of critical resources and the point at which the website becomes interactive. This can also slow down event handling and increase input delay for interactions that happen while the page is still loading. All of these factors can increase Interaction to Next Paint (INP), the Core Web Vitals metric that replaced First Input Delay (FID) in March 2024.

The only Core Web Vitals metric that RTT doesn't directly affect is Cumulative Layout Shift (CLS). In other words, RTT directly affects two of the three Core Web Vitals.

We also have a free Core Web Vitals Checker that you can use to check your website's Core Web Vitals score.

Check RTT using CrUX API

The Chrome User Experience Report (CrUX) has recently started including RTT values. You can learn more on the metrics page of the CrUX API documentation.

However, this RTT value reflects round trips for HTTP requests rather than a lightweight request like ping. That makes it more relevant for web performance, but it can differ from what you measure with ping, partly because it is collected from real users on their own networks and devices.
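Here is a hedged Python sketch of querying that value through the CrUX API's `records:queryRecord` endpoint. The origin and API key are placeholders. The metric key `round_trip_time` and the response path `record.metrics.round_trip_time.percentiles.p75` are assumptions based on how the CrUX API reports other metrics, so verify both against the current metrics page before relying on them.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"


def build_crux_request(origin: str, api_key: str) -> urllib.request.Request:
    """Build a CrUX API query asking only for the (assumed) round_trip_time metric."""
    body = json.dumps({"origin": origin, "metrics": ["round_trip_time"]}).encode("utf-8")
    query = urllib.parse.urlencode({"key": api_key})
    return urllib.request.Request(
        f"{CRUX_ENDPOINT}?{query}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def p75_rtt_ms(response_json: dict) -> Optional[float]:
    """Read the 75th-percentile RTT (ms) from a CrUX response, or None if it is missing."""
    metric = response_json.get("record", {}).get("metrics", {}).get("round_trip_time", {})
    p75 = metric.get("percentiles", {}).get("p75")
    return None if p75 is None else float(p75)


def fetch_p75_rtt_ms(origin: str, api_key: str) -> Optional[float]:
    """Send the query (requires network access and a valid API key)."""
    with urllib.request.urlopen(build_crux_request(origin, api_key), timeout=10) as resp:
        return p75_rtt_ms(json.load(resp))
```

For example, `fetch_p75_rtt_ms("https://example.com", "YOUR_API_KEY")` would return the p75 RTT that real Chrome users experienced for that origin, if CrUX has enough data for it.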

How to Reduce Round Trip Time

Our article on reducing TTFB covers both aspects (reducing server response time and latency), and some of its suggestions apply to reducing RTT as well.

Here are some suggestions that can help reduce the RTT of your website.

1) Use a CDN

As discussed earlier, a CDN can significantly reduce both RTT and TTFB because it keeps copies of your website's resources in multiple locations. When a user requests your website, the CDN serves them from its closest Point of Presence.

You can refer to our article to find out the best CDN.
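The toy Python sketch below illustrates the selection idea: serve the user from whichever location has the lowest RTT. Real CDNs steer users with mechanisms such as anycast or DNS-based routing rather than this exact logic. The PoP names and RTT numbers are hypothetical.

```python
def pick_closest_pop(pop_rtts_ms: dict[str, float]) -> str:
    """Return the point of presence with the lowest RTT to this user."""
    if not pop_rtts_ms:
        raise ValueError("no points of presence to choose from")
    return min(pop_rtts_ms, key=pop_rtts_ms.__getitem__)


# Hypothetical RTTs (ms) from one user to the origin server and to three CDN PoPs.
origin_rtt_ms = 180.0
pop_rtts_ms = {"pop-frankfurt": 25.0, "pop-london": 18.0, "pop-new-york": 95.0}
best = pick_closest_pop(pop_rtts_ms)
print(best, origin_rtt_ms - pop_rtts_ms[best])  # pop-london 162.0
```

On a cache hit, that saving applies to every round trip the request needs, not just one.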

2) Consider using a Load Balancer

A load balancer distributes traffic across multiple origin server nodes, which helps prevent network congestion during traffic spikes. Moreover, having multiple servers across the globe can reduce network delay, since content is served from the node closest to the user (when there is no CDN, the content is dynamic, or there is a cache miss).
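The Python sketch below combines both ideas from the paragraph above: prefer the node closest to the user, and skip nodes that are already at capacity. This policy is a hypothetical illustration, not the behavior of any specific load balancer, and the node names and numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    rtt_to_user_ms: float   # hypothetical measured or estimated RTT from the user
    active_connections: int
    capacity: int


def choose_node(nodes: list[Node]) -> Node:
    """Pick the closest node with spare capacity; break ties by the lighter load."""
    available = [n for n in nodes if n.active_connections < n.capacity]
    if not available:
        raise RuntimeError("all nodes are at capacity")
    return min(available, key=lambda n: (n.rtt_to_user_ms, n.active_connections))


nodes = [
    Node("eu-west", rtt_to_user_ms=20.0, active_connections=100, capacity=100),  # full
    Node("us-east", rtt_to_user_ms=90.0, active_connections=10, capacity=100),
    Node("ap-south", rtt_to_user_ms=200.0, active_connections=0, capacity=100),
]
print(choose_node(nodes).name)  # us-east
```

During a traffic spike, the nearest node fills up and requests spill over to the next-closest node. Those users see a higher RTT, but they avoid queueing behind a congested server.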

3) Optimize Network Paths

Some CDNs offer features or add-on services that optimize network paths through smart routing. Some of the popular services include: